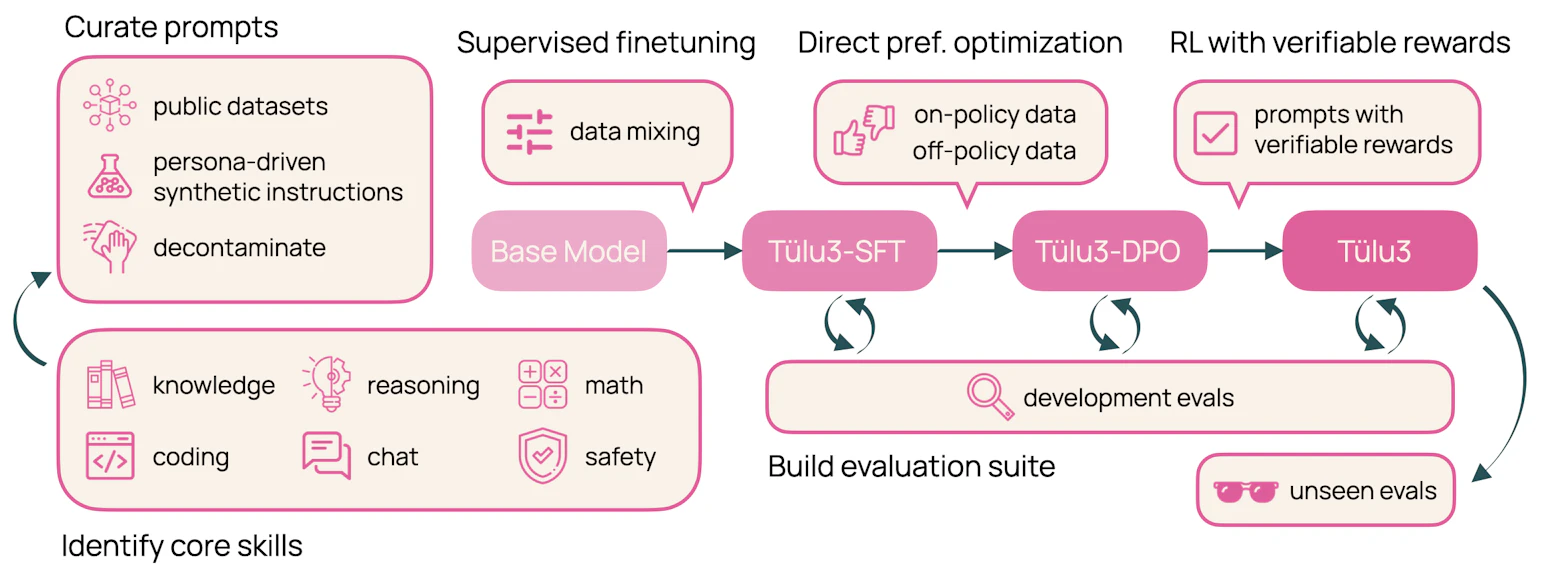

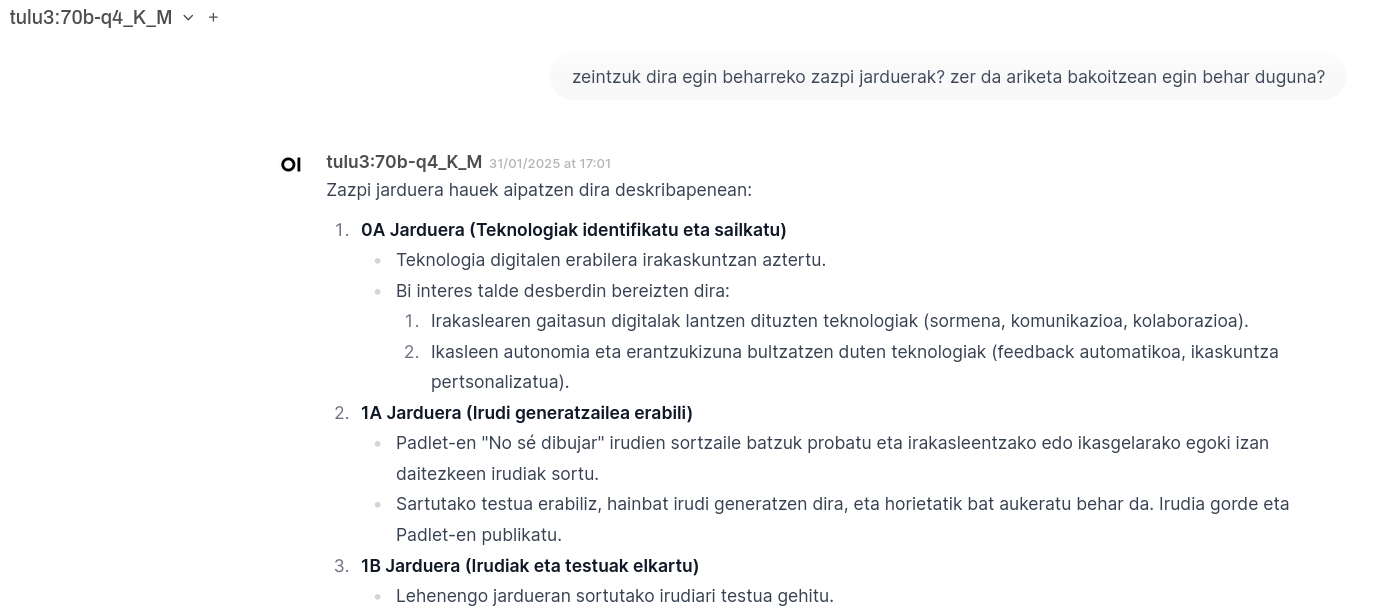

In recent months, local LLMs have improved significantly, and some of them perform surprisingly well in Basque. Among them, I want to highlight Tülu 3 70B, which gives good results in Basque when using the quantized version (q4_K_M). Until the Latxa instruction model becomes available, it is probably the best option for holding conversations or generating text in Basque.
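You can also talk to the model from a script instead of a chat interface. One way is through Ollama, the local model runner used later in this setup, which serves models over an HTTP API. The sketch below uses only the Python standard library. It assumes Ollama is listening on its default address (`http://localhost:11434`) and that the model was pulled under the tag `tulu3:70b`. That tag is an assumption on my part, so check `ollama list` for the exact name on your machine. The helper names are my own.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"
# Assumption: tag of the Tülu 3 70B (q4_K_M) model in your local Ollama install.
MODEL = "tulu3:70b"


def build_chat_payload(model: str, user_message: str) -> dict:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,  # ask for one JSON response instead of a stream of chunks
    }


def chat(user_message: str, model: str = MODEL, base_url: str = OLLAMA_URL) -> str:
    """Send a single user message to the local model and return the reply text."""
    payload = json.dumps(build_chat_payload(model, user_message)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Non-streaming /api/chat responses carry the reply under message.content.
    return body["message"]["content"]
```

For example, `chat("Kaixo! Azaldu laburki zer den RAG sistema bat.")` ("Hello! Briefly explain what a RAG system is.") sends a Basque prompt and returns the model's answer as a string. Setting `"stream": False` keeps the example simple, because by default Ollama streams the reply as a series of JSON objects.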

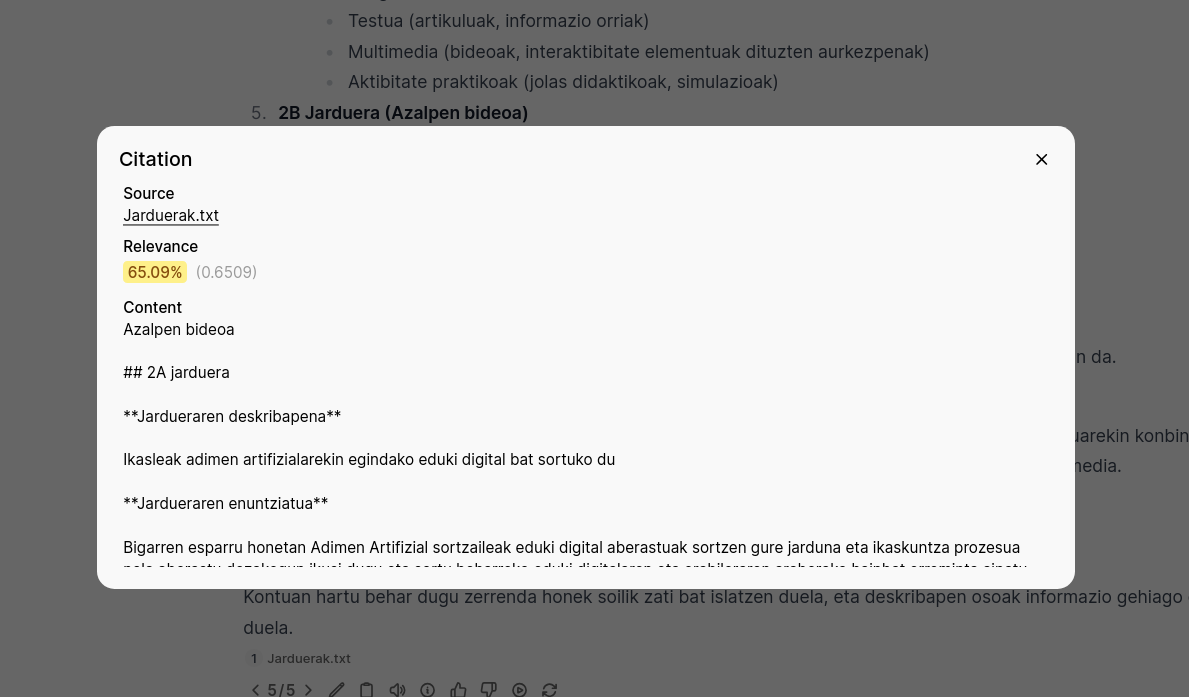

Components for setting up the RAG system

The components needed to set up a RAG (Retrieval-Augmented Generation) system are:

- Local LLM: Tülu 3 70B (q4_K_M)

- Embedding model: Snowflake/snowflake-arctic-embed-l-v2.0

- User interface: Open-WebUI

- Ollama: For managing LLMs and embedding models
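In a RAG system, the embedding model and the LLM play different roles. The documents are split into chunks, and the embedding model turns each chunk into a vector. At question time, the chunks whose vectors are closest to the question's vector are added to the prompt sent to the LLM. The sketch below illustrates that retrieval step in plain Python against Ollama's `/api/embed` endpoint. It assumes Ollama's default address and that Snowflake/snowflake-arctic-embed-l-v2.0 is available in Ollama under the tag `snowflake-arctic-embed2`. The chunk size, overlap, prompt wording and helper names are my own illustrative choices, not Open-WebUI's defaults.

```python
import json
import math
import urllib.request

# Assumptions: Ollama listens on its default local port, and
# Snowflake/snowflake-arctic-embed-l-v2.0 is available under this tag.
OLLAMA_URL = "http://localhost:11434"
EMBED_MODEL = "snowflake-arctic-embed2"


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most `size` words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def embed(texts: list[str], model: str = EMBED_MODEL, base_url: str = OLLAMA_URL) -> list[list[float]]:
    """Embed a batch of texts with Ollama's /api/embed endpoint (one vector per text)."""
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/embed",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["embeddings"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 3) -> list[int]:
    """Indices of the k chunk vectors most similar to the query, best first."""
    scores = [cosine_similarity(query_vec, v) for v in chunk_vecs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Place the retrieved chunks before the question so the LLM can ground its answer."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def retrieve(question: str, document: str, k: int = 3) -> list[str]:
    """Chunk a document, embed the chunks and the question, and return the k best chunks."""
    chunks = chunk_words(document)
    chunk_vecs = embed(chunks)
    query_vec = embed([question])[0]
    return [chunks[i] for i in top_k(query_vec, chunk_vecs, k)]
```

With Ollama running, `retrieve(question, document_text)` returns the most relevant chunks, and `build_prompt(question, chunks)` produces the text that is then sent to the chat model. Open-WebUI performs equivalent steps itself when you attach documents, so this sketch only illustrates the mechanism; its chunking and prompt template may differ. Overlapping chunks are used so that a sentence falling on a chunk boundary still appears whole in at least one chunk.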

Advantages

The main advantages of this system:

- Privacy: Everything runs locally, so your data stays on your machine

- Basque support: The Tülu 3 model performs well in Basque

- Free: All the components are open source and free to use

- Easy: Thanks to Open-WebUI, everything can be managed from a graphical interface

I will update this article when the Latxa instruction model is released, but for now, Tülu 3 is an excellent choice for setting up a RAG system in Basque.